Testing Freepik Spaces: Node-Based Canvases Are Taking Over AI Video

Everyone is moving to a canvas. Is this new workflow actually better? A first-look review and test drive.

Forget your old workflow.

The era of surfing feeds for generated videos and images, stitching together different AI tools, downloading, uploading, re-prompting, and upscaling is (thankfully) coming to an end.

The new meta? Node-based canvases.

If you’ve felt that AI video creation is getting chaotic, you’re not wrong. Just four months ago, the big debate was about JSON prompts (spoiler: they weren’t the revolution we hoped for). Now, the entire industry is pivoting to a visual, node-based workflow.

This “canvas” approach, pioneered by platforms like Flora, is now the feature everyone is rushing to copy. Krea has “Nodes” in a closed beta, ImagineArt is working on “Workflows,” and Runway is building its own.

It’s a clear signal: this is the new standard for serious creators.

The latest player to enter the ring is Freepik, with its new “Spaces.” I’ve spent the last few days putting it through its paces to see if it’s just a copycat or a genuine contender.

What is Freepik Spaces?

In short, Freepik Spaces is a cloud-based, infinite canvas designed to be an all-in-one creative environment.

It’s not just one tool; it’s an integrator. It pulls all of Freepik’s AI tools (image generation, video creation, audio, retouching, upscaling) into a single collaborative map. The entire idea is to build, automate, and share complex creative workflows in one place, replacing the “tool-hopping” that plagues all of us.

But does it work? Let’s find out.

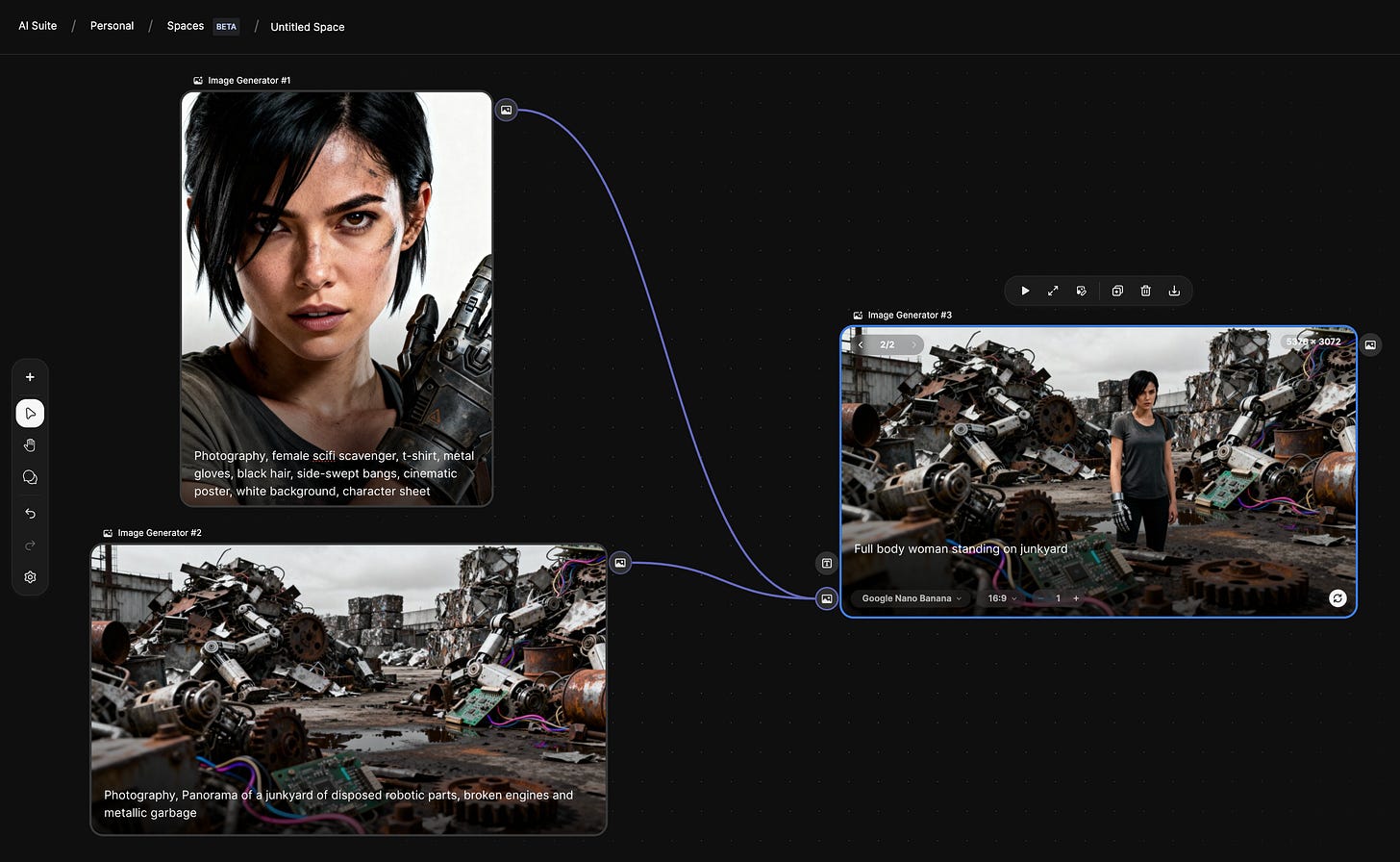

The Test Drive: A simple “Character-to-Scene” workflow

I wanted to start with a common, practical task: create a consistent character, animate them, and upscale the result.

In the old workflow, this would be at least three separate screens. In Spaces, I was able to build it in about two minutes.

Freepik offers multiple templates, but I started from scratch to understand the logic.

Node 1 works like any prompt box you already know: you select the model used to generate an image or video, the aspect ratio, and the number of generations.

I can then generate two separate images using the following prompts:

Photography, female scifi scavenger, t-shirt, metal gloves, black hair, side-swept bangs, cinematic poster, white background, character sheet

and

Photography, Panorama of a junkyard of disposed robotic parts, broken engines and metallic garbage

Now I want to merge these two images and place my character in the setting using Nano Banana:

Full body woman standing on junkyard

That’s how nodes work. They give you a clear visualization of your process.

The Verdict: Is this workflow any good?

Yes. Emphatically, yes.

There are two advantages that are clear to me about this workflow:

Freepik Spaces allows collaboration. I can share the link to this space, and another person can join and work with me on the project. This is very powerful. Collaboration is the reason Figma became the powerhouse it is now. While the idea of the lone director is romantic, making serious AI videos is still a team effort.

Freepik Spaces allows iteration. In the workflow above, if I decide the character should be blonde, I only need to modify the character image and then regenerate the final shot. Now imagine this applied to longer workflows and scenes.
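
To make the iteration point concrete, here is a minimal Python sketch of the idea. It is not Freepik's API or how Spaces is implemented; the node names (`character`, `junkyard`, `composite`) and the `downstream_of` helper are hypothetical, chosen only to mirror the three nodes from my test drive. It shows why editing one node only forces its downstream nodes to be regenerated.

```python
from graphlib import TopologicalSorter

# Hypothetical model of the three-node workflow from the test drive.
# Each key is a node; its value is the set of nodes it takes as input.
workflow = {
    "character": set(),                      # character sheet prompt
    "junkyard": set(),                       # background panorama prompt
    "composite": {"character", "junkyard"},  # merge step
}


def downstream_of(changed, graph):
    """Return the edited node plus every node that depends on it."""
    stale = {changed}
    # static_order() yields inputs before the nodes that consume them,
    # so staleness propagates through the graph in a single pass.
    for node in TopologicalSorter(graph).static_order():
        if graph[node] & stale:
            stale.add(node)
    return stale


stale = downstream_of("character", workflow)
print(sorted(stale))  # ['character', 'composite']
```

Changing the character marks only the character and the composite as stale; the junkyard image is reused as-is. In a longer graph with dozens of nodes, that is the difference between re-running one branch and redoing everything by hand.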

Node-based canvases are not a fad. They are the logical (and necessary) next step for serious AI video creation. This is one to watch closely.

Of course, not everyone is happy.

What do you think? Let me know in the comments.