Luma expands Dream Machine AI video model into full creative platform

All the news from the AI world for creatives

Good day, creatives

In today’s Newsletter:

1️⃣ Luma Labs takes AI workflows one step forward

2️⃣ Runway announces Frames

3️⃣ Sora leaked

4️⃣ Most people can’t identify AI Art. Take the test.

5️⃣ Steal this Midjourney sref

Let’s go.

Luma Labs takes AI Video Creation one step forward with Dream Machine upgrade

The world of AI-driven video creation is about to take another leap forward, thanks to Luma Labs' latest update to its Dream Machine platform.

This major upgrade introduces Photon, a new image model designed to bring unmatched speed, creativity, and collaboration to the table, alongside an entirely revamped approach to generative AI video creation.

Let’s unpack all the info:

Idea-to-Video: A New Era of AI Creativity

When Dream Machine launched in June, it was already a game-changer, offering faster generations, improved motion, and innovative features like looping, prompt enhancement, and keyframes.

Now, Luma Labs is taking it to the next level.

The latest iteration aims to make idea-to-video creation more seamless, intuitive, and collaborative than ever before.

This is part of the trend we commented on two weeks ago: platforms are going full stack, letting users run their entire workflow in a single place.

With competition in the space heating up, Luma Labs is stepping up its game.

Meet Photon: The Star of the Upgrade

The centerpiece of this update is Photon, a new text-to-image model. Built on Luma's Universal Transformer architecture, Photon is faster than the previous model. And it doesn’t just power Dream Machine; it’s also available as a standalone tool for generating realistic, versatile images.

How does it compare to leading models like Flux and Midjourney? Let’s run a quick test with a prompt that includes text:

Prompt: Latin woman smiling in a windy open space with a blue sky in the background and some clouds, holding a sign written in ballpoint pen on a notebook saying “This is an example to test out the new model”

With its latest upgrade, Luma Labs looks to streamline AI workflows with faster, smarter, and more collaborative tools.

Runway announces Frames

Trying to steal Luma's thunder, Runway unveiled Frames, its newest image generation model, focused on unprecedented stylistic control and consistency (no release date, though).

Style mastery: The model excels at maintaining specific aesthetics across multiple generations while allowing creative exploration.

Diverse worlds: Showcases 10 distinct “worlds”, including 1980s SFX Makeup, Japanese Zine aesthetics, and Magazine Collage styles.

Controlled rollout: Being gradually released through Gen-3 Alpha and the Runway API with built-in safety measures and content moderation.

Why this is important:

Frames shows how the conversation in the AI world is shifting from “can AI generate good images?” to “can AI maintain artistic vision?” The ability to establish and maintain a specific aesthetic across multiple generations is crucial for creative professionals who need consistency on bigger projects. AI is slowly but surely becoming a reliable creative partner rather than just a tool for one-off generations.

Sora leaked

More drama from OpenAI: A group of "early testers, red teamers, and creative partners" has leaked OpenAI's Sora AI video model via Hugging Face to protest what they say is unpaid development work. The group says OpenAI asked hundreds of artists to test the system, find errors, and provide feedback without fair compensation.

Here's what you need to know:

The leaked version generates 10-second, 1080p videos and looks like a faster, "turbo" variant. The code also suggests style controls and restricted customization options.

Many Sora-generated videos surfaced on X following the leak. However, the leaked frontend stopped working after a few hours, likely due to OpenAI revoking access.

The leak was part of a protest by artists who claim OpenAI exploited them for unpaid testing and feedback, providing only "artistic credibility" in return.

They also criticized OpenAI for tightly controlling early access to Sora, requiring content sharing approval, favoring select creators, and misrepresenting the model's true capabilities. Here’s the full letter.

Why this is important:

The Sora leak highlights growing tensions between artists and AI developers over fairness, transparency, and the ethics of creative contributions in AI projects.

Can you identify AI Art? Take the test

Scott Alexander, in his blog Astral Codex Ten, conducted a test to see if people can distinguish between art created by humans and that generated by AI. The results revealed that we are not very good at it. Take the test here and share your results.

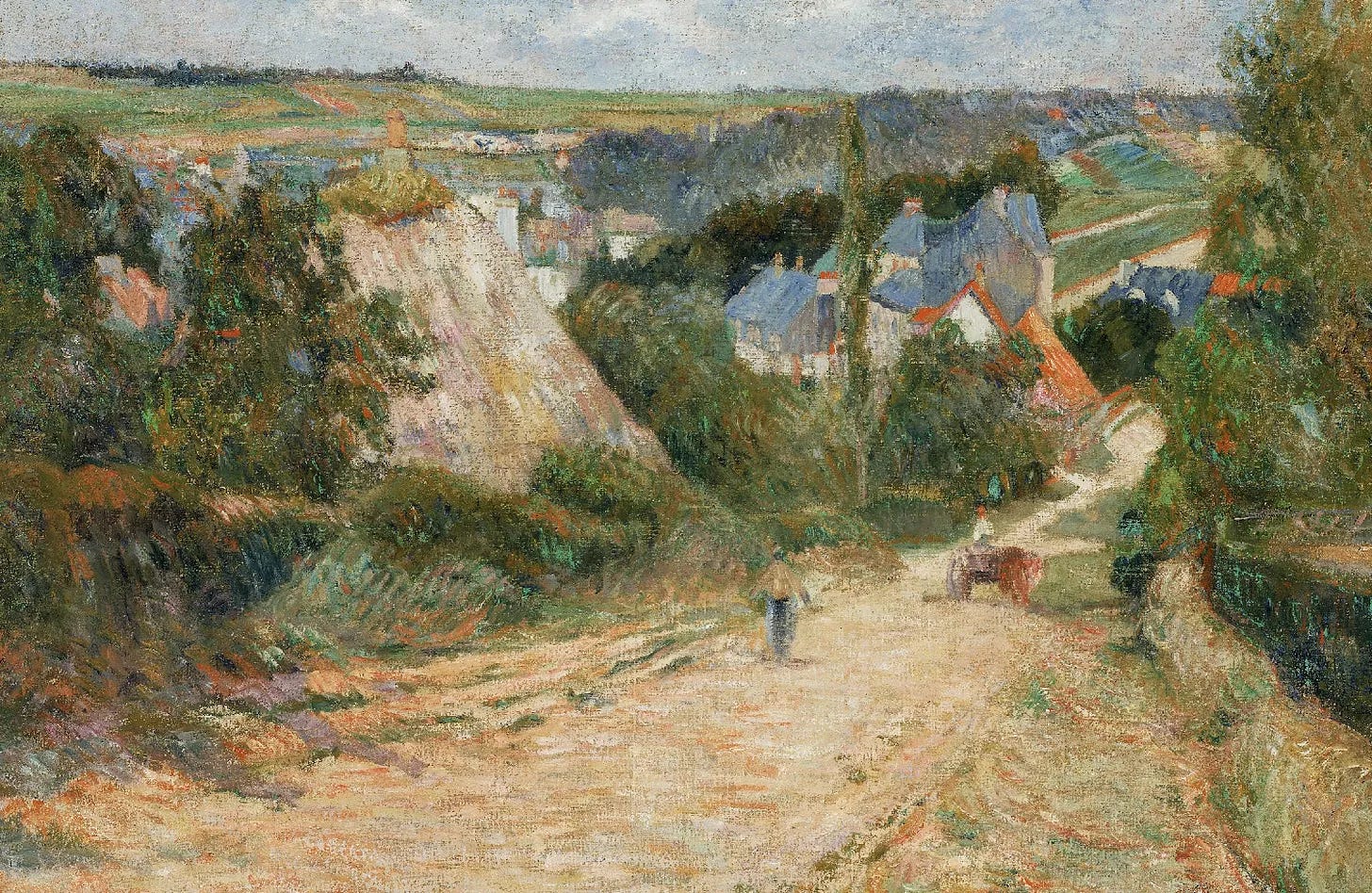

Steal this Midjourney sref

To use it, add --sref 2550566787 at the end of your prompt in Midjourney. Your images will be generated in this style.