Luma Ray 3 is here: Testing the AI model that thinks in video

A deep dive into Luma's new engine, from its game-changing Draft Mode and HDR output to its ability to understand visual commands beyond the prompt

Remember when AI video was a novelty of wobbly, inconsistent clips? You’d spend ages crafting the perfect prompt, only to get a result where a character grows a third arm or the camera loses its mind.

That era of frustration might be over (let’s try to keep the hype down).

Just months after Ray 2, Luma has unleashed Ray 3, and it's an interesting upgrade. Billed as the world's first reasoning video model, it's now available for free in Dream Machine, and it promises to solve some of the biggest headaches that have kept AI video out of professional workflows.

Let’s explore what it offers and then we’ll put it to the test.

What problems does Ray 3 actually solve?

Before we get to the flashy examples, let's talk about the core improvements that target real-world creative pain points.

Pain Point: Iteration is painfully slow and costly.

Ray 3's Solution: Draft Mode.

This is a very nice improvement. You can now generate low-res previews in seconds, which lets you rapidly test ideas, camera angles, and concepts without burning through credits or waiting minutes for each render. The UI lines up your test variations in a horizontal flow and new explorations in a vertical flow, which is very handy. Once you nail the shot, a single click upscales it to production-ready 4K HDR. It's 5x faster and 5x cheaper, and it lets you stay in the creative flow.

Pain Point: AI doesn't really understand my complex vision.

Ray 3's Solution: Visual Reasoning.

Prompting can feel like a guessing game. Ray 3's reasoning engine allows it to understand more complex, multi-step directions. Even better, it can interpret visual annotations. You can now literally draw or scribble on an image to direct an actor's performance, block a scene, or guide the camera's movement, all without typing a single extra word.

To tell you the truth, I'm still learning how to use this feature to its full potential. I sense real promise here, but I haven't fully grasped it yet.

Pain Point: The final output still looks "AI-generated" and flat.

Ray 3's Solution: Studio-Grade HDR.

This is the feature that bridges the gap to professional use. Ray 3 natively generates video in 10, 12, or even 16-bit high dynamic range. This means incredible detail in both shadows and highlights, along with vivid, accurate color.
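To put those bit depths in perspective, here is a quick back-of-the-envelope calculation. It is our own illustration, not something from Luma: it assumes simple integer encoding (float formats such as half-precision EXR spread their values differently) and uses 8-bit as the baseline for typical SDR video. Each extra bit doubles the number of tonal levels a single color channel can store.

```python
# Illustrative arithmetic only: tonal levels per color channel at a given bit depth.
# Assumptions: integer encoding, and 8-bit as the "typical SDR" baseline.
# This is not a Ray 3 specification.

def levels_per_channel(bits: int) -> int:
    """Number of distinct values one color channel can hold at this bit depth."""
    return 2 ** bits

def total_colors(bits: int) -> int:
    """Distinct RGB colors when all three channels use this bit depth."""
    return levels_per_channel(bits) ** 3

for bits in (8, 10, 12, 16):
    print(f"{bits:>2}-bit: {levels_per_channel(bits):>6} levels per channel, "
          f"{total_colors(bits):.2e} RGB colors")
```

Going from 8 to 10 bits alone quadruples the levels per channel (256 to 1,024), which is why HDR gradients like skies and shadows band far less.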

Pushing the Limits: The Tests

Talk is cheap. We put Ray 3 through three difficult challenges to see if it could handle the nuance, speed, and coherence required for real-world use.

Test 1: Can it act?

The subtle expression of human emotion is often where AI fails. We started with this Midjourney image of a woman and gave Ray 3 a simple, yet difficult, acting direction.

Prompt: Portrait of a woman --chaos 10 --ar 16:9 --exp 10 --sref 3892014345 --stylize 1000

Let's ask the girl for some tears.

Prompt: woman crying, tears in her eyes, sorrow, sadness, pov shot, photorealistic style

Result: The AI staple of weird, glitchy tears is still a minor hurdle. But look past that, and the performance is stunning. The subtle shift in her expression, the sorrow in her eyes, the clenching of her jaw... it's a believable, Oscar-worthy performance that conveys genuine emotion.

Test 2: High-speed VFX & world cohesion

Next, let's try something with blistering speed and a complex environment. Can the model maintain object consistency while moving at high velocity through changing scenery?

Prompt: A cinematic still of a quick tiny starship flying across a massive futuristic space city in block shape in deep space, captured in a dramatic low-angle wide shot, heavy lens flare, saturated blues and oranges, subtle atmospheric haze, bold contrast, in the style of 2025 Filmsupply, shot on IMAX, golden hour lighting, trending color grading --chaos 10 --ar 16:9 --exp 20 --sref 2901713362 4138069935 680574490 --sw 300 --stylize 1000

We want the ship to fly into a tunnel.

Prompt: Fast motion camera chasing a black starship flying over a futuristic grim city, drone shot of the starship as it enters a futuristic tunnel at hyperspeed

Result: Incredible. The shot is dynamic, the motion blur is realistic, and the lighting is cinematic. Most importantly, the starship remains a consistent object as it transitions perfectly from flying over the city to entering the tunnel.

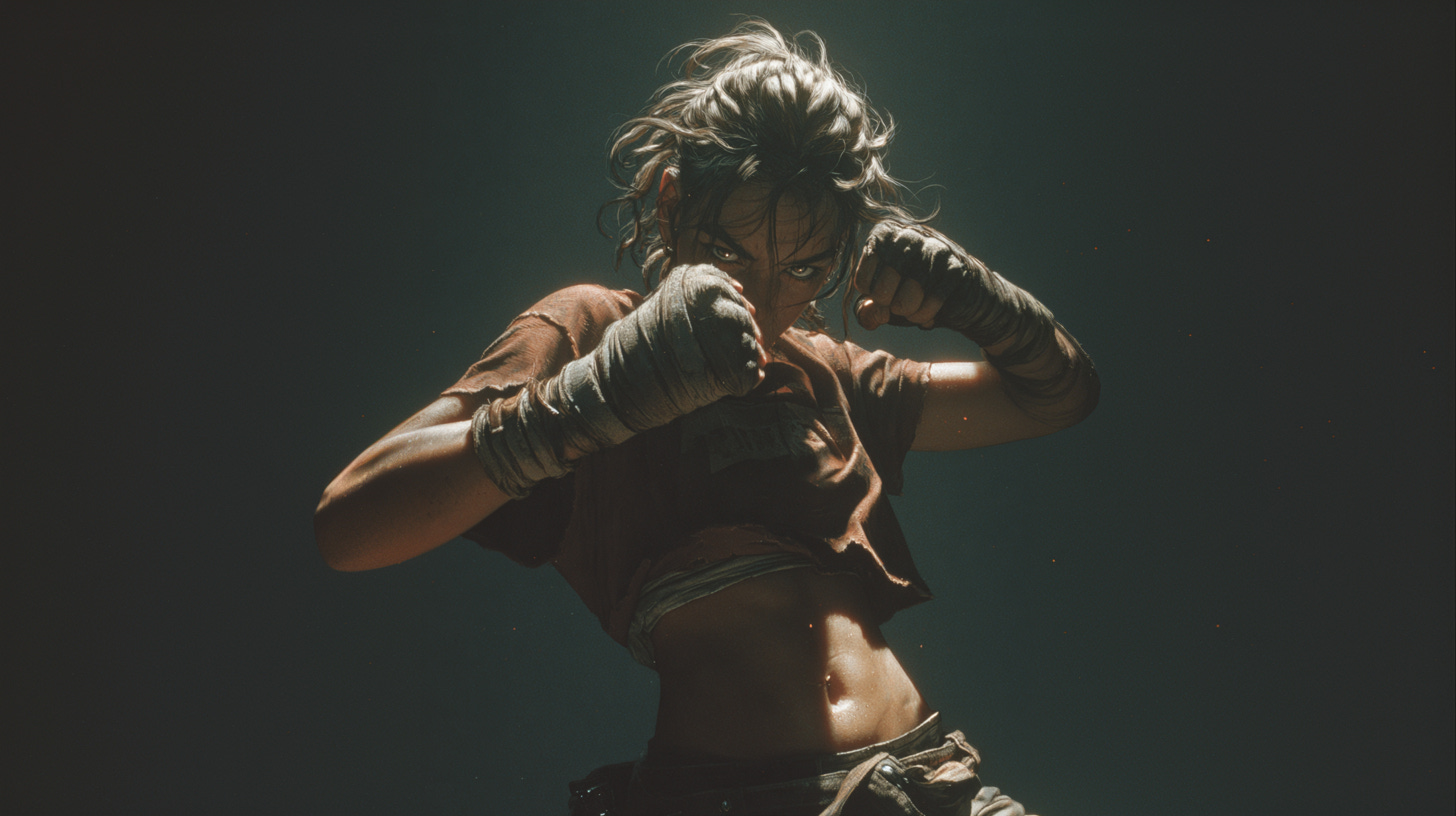

Test 3: The ultimate challenge - a coherent fight scene

This is where most models completely fall apart. Fast, chaotic motion, character interaction, and maintaining a consistent form during a fight is the ultimate test.

Prompt: Photography, close up, female scavenger in closed fighting position, fists in front of the face, wearing athletic ragged t-shirt, screaming, hair flowing, lean build, hand wraps visible, high contrast, cinematic mood, motion, dynamic motion, close up intensity, blur, action, bokeh, fire, sparks, depth of field, urban background with explosions --chaos 10 --ar 16:9 --exp 20 --sref 2901713362 4138069935 680574490 --sw 300 --stylize 1000

Let's watch the girl throw some punches.

Prompt: photorealistic style, medium shot, robo arm camera of women throwing punches and being hit in the face, gritty, fast and dramatic

Result: Whoa. The dynamism and speed of this shot are electric. The camera movement feels intentional and powerful, like a high-end robotic arm rig. While the action is almost too fast to judge character consistency closely, the motion is coherent, impactful, and free of the grotesque morphing that typically plagues AI-generated fight scenes. This feels like a real action sequence.

Final Thoughts: We're in the Game

Luma Ray 3 is a great upgrade, and I applaud its focus on solving core creator problems: slow workflows, imprecise control, and subpar final quality.

The barrier to creating cinematic, high-fidelity visuals is crumbling. What will you create first?