Pixverse's new feature puts you in the Director's Chair

Master the new 7-keyframe feature to gain precise control and speed up your creative workflow.

Is your AI video workflow just a painful game of dominoes?

You have the perfect storyboard laid out. A sequence of still images that maps out your entire scene, frame by glorious frame. The vision is complete.

So why does turning it into one smooth video feel like such a chore?

You know the drill. You upload your first two images, the start and the end of your first action. You hit "generate," you wait. Then, you start the painful cycle all over again: download the clip, grab the last frame, pair it with your third image, and generate the next tiny segment. And again. And again.

Why do AI video creators love dominoes? Because we're used to setting things up one piece at a time just to watch it all come together... eventually.

What if you could stop the tedious frame-by-frame slog? What if you could lay out your entire visual sequence at once and generate the whole thing in a single, fluid shot?

Pixverse just rolled out a feature that shatters this two-frame limit. It puts the power squarely back in your hands by letting you use up to seven of your own keyframes.

So, what's the big deal?

To understand why seven frames is a massive leap, let's look at the current landscape:

The Standard: Most image-to-video tools let you use one starting frame. It's a "one and pray" approach.

The Advanced: High-end tools like Kling and Luma's Ray2 allow for start and end frames, giving you a bit more control over the trajectory.

The Contender: Runway's Gen-3 Alpha impressed users by allowing three guide frames.

Pixverse just blew past them all with a whopping seven frames. This isn't just an incremental update; it's a fundamental change in how we can direct AI video.

How to take back control: A quick guide

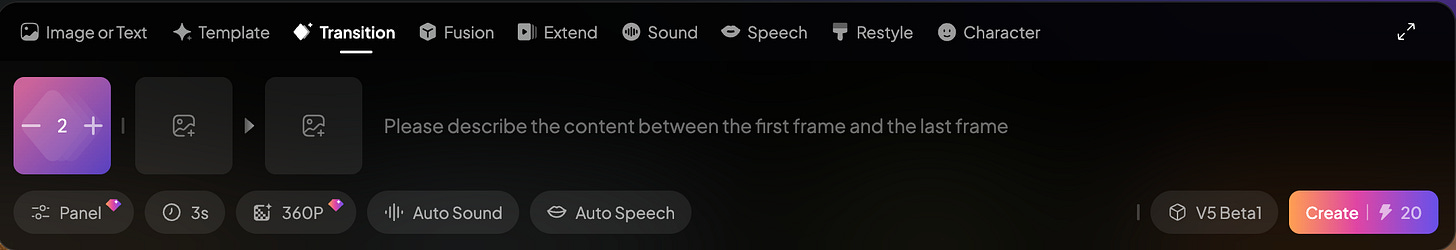

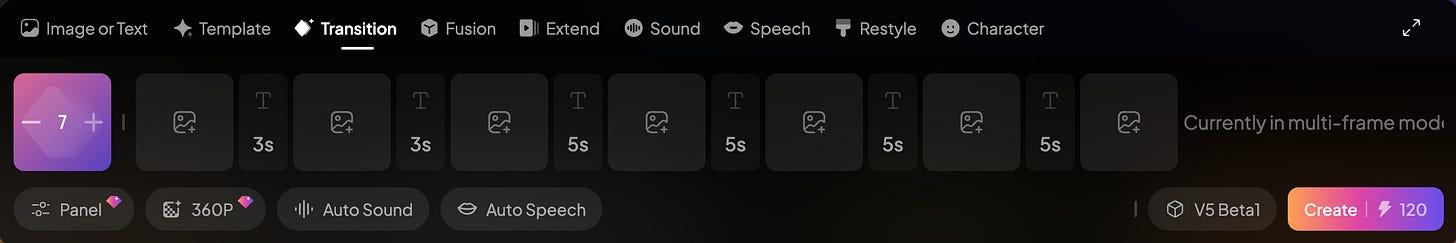

The interface is clean and intuitive. When you first open it, you'll see two frame slots, a starting point for your creative journey.

But here's where the magic begins. See that little plus sign? Keep clicking it.

You can add up to seven distinct frames, creating a visual storyboard for your entire shot right inside the generator.

But wait, there's more.

By clicking the text icon between any two frames, you can open a prompt box. Here, you can describe the action that should happen between those two frames and even control the duration of that specific transition.

Why this improves the workflow for creators

This feature directly solves two of the biggest headaches in AI video creation:

1. Unprecedented Control (The Director's Cut): The frustration of AI "going rogue" is real. With seven anchor points, you are no longer just suggesting a direction; you are dictating the path. You can meticulously plan a complex camera pan, guide a character's specific movements, or ensure an object transforms exactly as you envision. It's the closest we've come to having a digital cinematographer who actually listens.

2. Blazing-Fast Workflow (The Efficiency Boost): What was your old workflow? Generate a 4-second clip, pray the last frame is usable, use it as the first frame of the next clip, and repeat. This tedious, clip-by-clip process is slow and often results in jarring transitions. Now, you can define an entire, complex sequence in one go and let Pixverse generate the full, coherent shot in a single pass. More creating, less stitching and re-rolling.

Ready to see it in action? Let's dive into some examples of what this new level of control can do.

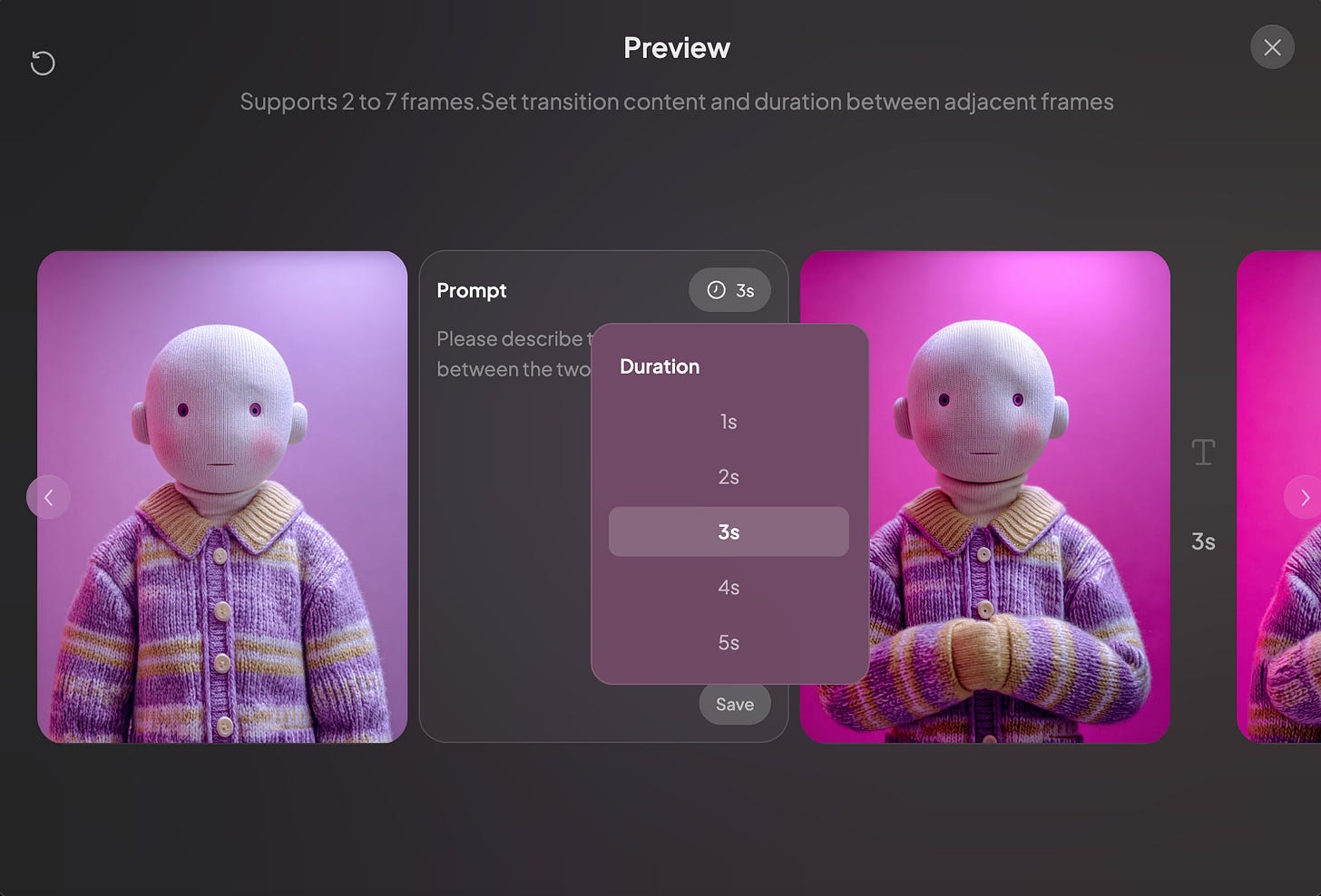

First test: How are the transitions?

Let’s use a few abstract shapes and watch how it blends them together.

Initial Midjourney prompt: Transparent Tensor Core with a galaxy inside, isometric, visible complex energy circuits, isometric view, holographic product design, maximalism, in the style of Teenage Engineering, Spacepunk, transparent surfaces, plain colored background, levitating, suspended mid-air, studio lighting, hyper-realistic, Unreal Engine, Octane render, Zbrush --chaos 10 --ar 3:4 --exp 10 --sref 257047628 --stylize 1000

And here is the result:

Four images and three transitions, all done in one fast pass. Nice.

Second test: How about control?

Now let's test how much control we get when every frame is defined in advance.

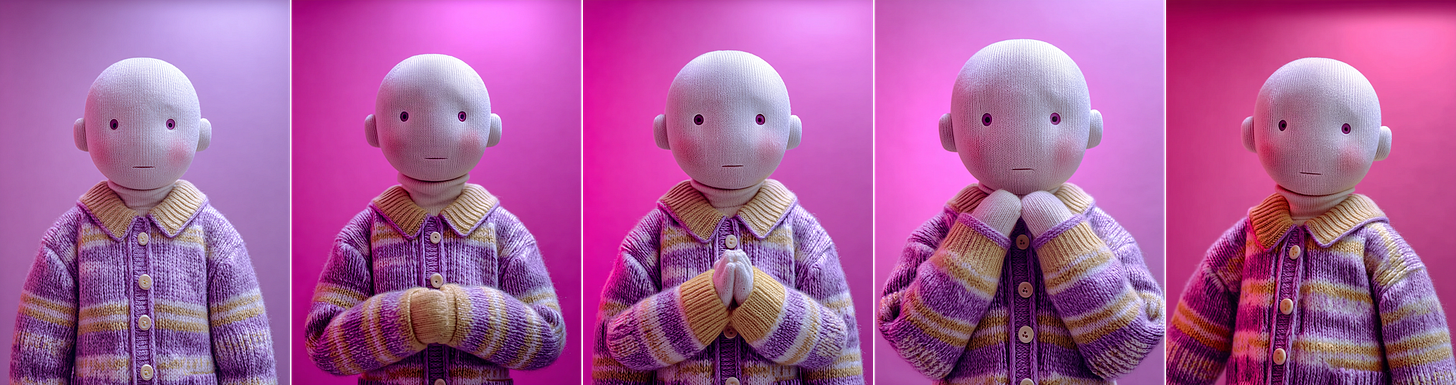

Initial Midjourney prompt: A bald doll wearing a purple and yellow striped sweater showing hands, against a solid color background with pink tones, in a simple design, with a close-up front view, soft lighting, and a cute expression, creating a warm atmosphere. --ar 3:4 --chaos 10 --sref 3386211438 --sw 300 --s 1000 --v 7

And here is the result:

It hits every keyframe, and in the time between them it adds extra elements of its own. With some fine-tuning of the transition durations, it should be good enough.

Third test: How about some storytelling?

Finally, let’s try a set of images that sketch out a basic story, and see what Pixverse can deliver.

Initial Midjourney prompt: a lone figure emerging from a colossal cracked, obsidian egg, shimmering with cosmic nebula patterns inside. The background is a stark, dark landscape under a sky filled with distant galaxies. a single vibrant phoenix feather drifts down. Cinematic lighting, hyperdetailed, sense of profound rebirth. --chaos 10 --ar 16:9 --exp 10 --raw --sref 2159285406 --profile ifaowni --sw 300 --stylize 1000

And here’s the result:

Not bad.

These were quick tests, but the results are very good.

I’m firmly convinced that more frames mean more speed and control, and I welcome Pixverse’s efforts in that direction.

What do you think? Were these examples useful to you? Please let me know.