Your best AI image is no longer a creative trap with Seedream 4.0

How ByteDance's new AI, Seedream 4.0, tackles the character consistency problem and turns your best images into dynamic video scenes

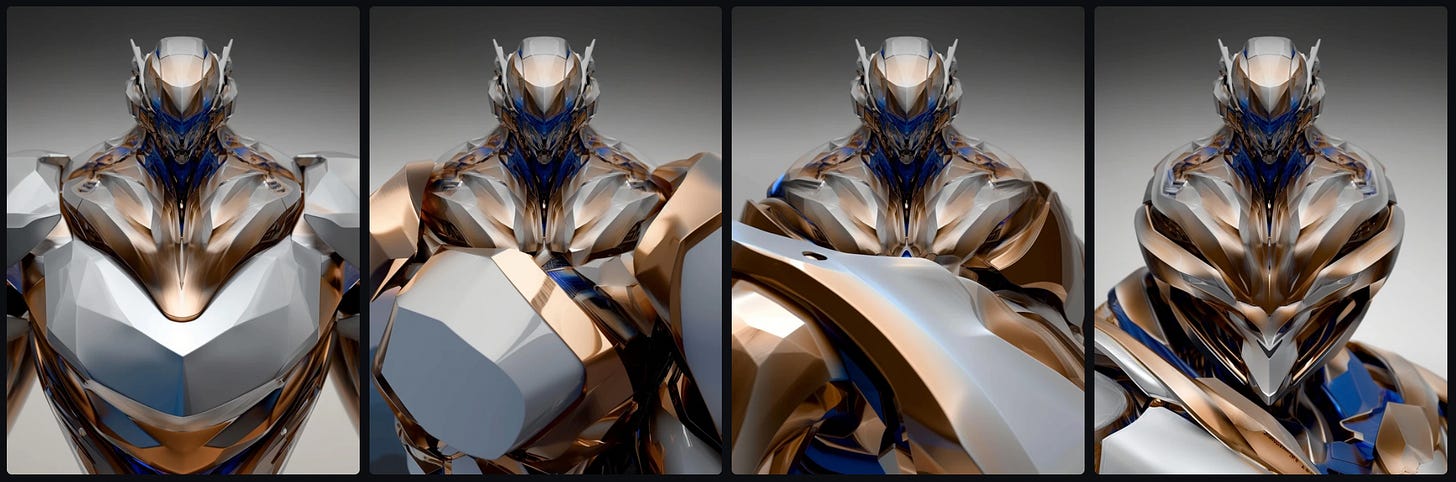

Sometimes, it just clicks. There, in the middle of all those generations, you have the perfect character, just there, looking at you. A stunning person, a detailed mecha, a breathtaking scene.

And then… you’re stuck.

What do you do with it? Trying to edit the same character into a full body shot often leads to frustratingly inconsistent results.

And don’t get me started on using it as a character reference in Midjourney. Your masterpiece is trapped as a single, static image. That creative dead end is one of the biggest headaches in AI art.

Until now.

A new tool for a new workflow

The game is changing, and fast. We love tools like Nano Banana, and now a powerful new contender has arrived from ByteDance (the company behind TikTok). It’s called Seedream 4.0, and it’s not just another image generator; it’s part of a fundamental shift in how we create.

Released in early September 2025, Seedream 4.0 is a multi-modal AI that unifies image generation and editing into one seamless process. It can produce stunningly detailed 4K visuals in seconds, but its true power lies in its ability to understand and iterate on an existing image with incredible precision.

In other words, it’s TikTok’s answer to Nano Banana.

This breaks down the barrier that has held so many of us back: creating a cohesive set of visuals from a single concept.

Let's walk through the new mindset:

From a single image to a full scene

I’ll show you exactly what this means with a practical example.

Step 1: Generate Your "Hero" Image

I started in Midjourney and created this image of a mecha. I was happy with the design, but it was just one shot.

Prompt: photography, armored core 6 mech design, flat background --chaos 10 --ar 3:4 --exp 25 --sref 2262264725 --profile 5n281qz --sw 300 --stylize 1000

Under the old mindset, this is where the struggle began. Now, this is where the fun starts.

Step 2: Enter "Seedream Mode"

Instead of trying to fight with Midjourney for variations, we switch tools. I uploaded my mecha image to an AI platform that integrates Seedream 4.0 (I use Freepik, but others work just as well).

Then, using my original image as the source, I generated a series of new shots with simple, descriptive prompts. The key is that Seedream understands the core subject and can reimagine it from any perspective.

I used variations of these prompts to get different angles:

Full body shot of mecha, ground view, non-symmetrical

Ultra wide, close up of mecha, extreme angle, full body

Ultra wide, back view, extreme angle, full body visible
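If you want to try a lot of angles, you can also build these variations programmatically instead of typing each one. Here is a minimal Python sketch of that idea. The framing and angle lists are my own illustrative picks based loosely on the prompts above, and the script only produces text: you still paste each prompt into whatever Seedream 4.0 interface you use.

```python
from itertools import product

SUBJECT = "mecha"

# Illustrative building blocks (assumptions, adapted from the prompts above).
FRAMINGS = ["full body shot", "ultra wide"]
ANGLES = ["ground view", "back view", "extreme angle"]


def build_prompts(subject, framings, angles):
    """Return one prompt string per (framing, angle) combination."""
    return [
        f"{framing} of {subject}, {angle}, full body visible"
        for framing, angle in product(framings, angles)
    ]


prompts = build_prompts(SUBJECT, FRAMINGS, ANGLES)
for prompt in prompts:
    print(prompt)
```

Two framings and three angles give six prompts. Swap in your own subject and lists to cover the shots your scene needs.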

The result? A set of perfectly consistent shots of the exact same mecha from different viewpoints, something that would have been nearly impossible a few months ago.

Step 3: Assemble and Animate

With a collection of consistent stills, the final step is easy. You can use an AI video generator’s Frame-to-Frame feature to create smooth motion between them (in this case, I used Hailuo, but any tool with Frame-to-Frame works).
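To plan the sequence before you start generating, it helps to list which still starts and ends each clip. The sketch below assumes the Frame-to-Frame feature takes one start image and one end image per clip. The filenames are hypothetical, and no video tool is called here; it only prints the pairing.

```python
def frame_pairs(stills, loop=False):
    """Pair each still with the next one as (start_frame, end_frame).

    With loop=True, a final pair connects the last still back to the first.
    """
    pairs = list(zip(stills, stills[1:]))
    if loop and len(stills) > 1:
        pairs.append((stills[-1], stills[0]))
    return pairs


# Hypothetical filenames for the stills generated in Step 2.
stills = ["mecha_front.png", "mecha_ground.png", "mecha_back.png"]

for start, end in frame_pairs(stills):
    print(f"clip: {start} -> {end}")
```

Three stills give two clips, and each clip ends on the frame the next one starts with, so the cuts line up when you assemble them.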

Add some sound design, and you’re done.

You’ve successfully turned a single, static image into a dynamic video sequence.

The implications are huge. We no longer have to think in terms of single generations. We can now design a character or object once and then direct a full photoshoot for it. This is a workflow that respects your initial creative spark and gives you the power to expand on it limitlessly.

What other broken workflows will tools like this fix? I'd love to hear your thoughts.